Audio compression technology is the basis for compressing audio signals. It refers to applying suitable digital signal processing to the original digital audio stream (PCM encoded) to reduce (compress) its bit rate with no loss of useful information, or with only negligible loss. This process is called compression coding, and it must have a corresponding inverse transform, called decompression or decoding. After passing through a codec system, an audio signal may pick up additional noise and some distortion.

There are two approaches to audio compression: lossy and lossless. The common MP3, WMA, and OGG formats are lossy. As the name implies, they discard part of the audio information, lowering the bit rate and in some cases the sampling frequency, so the output file is smaller than the original.

The other approach, lossless compression, is the main subject here. Lossless compression reduces the size of an audio file while preserving 100% of the data in the original, so the compressed file can be restored to the same size and the same bit rate as the source file. Lossless formats include APE, FLAC, WavPack, LPAC, WMA Lossless, Apple Lossless, La, OptimFROG, and Shorten; of these, APE and FLAC are the most common.

1. Features related to digital audio

The quality of digital audio depends on the sampling frequency and the number of quantization bits. For high fidelity, the sampling points should be as close together in time as possible, which means a high sampling frequency, and the amplitude steps should be as fine as possible, which means a large number of quantization bits.
The direct result is pressure on storage capacity and on transmission channel capacity.

Audio signal transmission rate = sampling frequency × number of quantization bits per sample × number of channels

For ordinary stereo, the sampling frequency is 44.1 kHz, the number of quantization bits per sample is 16, and the number of channels is 2. The digital bit stream is therefore about 1.4 Mbit/s. The amount of data in one second is 1.4 Mbit / (8 bit/byte), or about 176.4 kbytes, equal to the data volume of 88,200 Chinese characters (at 2 bytes per character).

Digital audio emerged to meet the needs of copying, storage, and transmission, but the volume of audio data puts heavy pressure on both transmission and storage. Audio compression aims to represent and transmit sound information at the lowest possible data rate while maintaining a given sound quality.

Signal compression applies suitable digital signal processing to the sampled and quantized digital audio stream. It removes the components whose effect on the listener's perception of the information is negligible and encodes only the useful part of the signal, which reduces the amount of data involved in encoding.

The components of a digital audio signal that have a negligible effect on perception are called redundancy. They include time-domain redundancy, frequency-domain redundancy, and auditory redundancy.

2. Time domain redundancy

Time-domain redundancy shows up in the following ways.

1) Non-uniformity of amplitude distribution

The quantization bits are allocated to cover the entire dynamic range of the signal. For small-amplitude signals, many of those bits sit idle.

2) Correlation between samples

A sound signal is a continuous process. After sampling, adjacent samples are strongly similar, so the difference between them is much smaller than the samples themselves.
3) Correlation of signal period

Across the entire audible range, only some frequency components, the characteristic frequencies, are active at any given instant. These characteristic frequencies recur with a certain period, and successive periods are correlated.

4) Long-term autocorrelation

The sample-to-sample and period-to-period correlations of a sound sequence also remain fairly stable over relatively long time intervals, and this stable relationship has a high correlation coefficient.

5) Silence

Pauses in the sound are redundant regardless of how they are sampled or quantized. Detecting the pauses and removing their sample data reduces the amount of data.

3. Frequency domain redundancy

Frequency-domain redundancy shows up in the following ways.

1) Non-uniformity of long-term power spectral density

For any kind of sound, over a relatively long time interval, the power in the low-frequency part is greater than in the high-frequency part, so the power spectrum is clearly not flat. Any given frequency band therefore carries corresponding redundancy.

2) Speech-specific short-term power spectral density

The short-term spectrum of a speech signal has peaks at certain frequencies and valleys at others. The peak frequencies, called formants, carry more energy and determine the characteristics of different speech sounds. The overall speech power spectrum is built on the pitch (fundamental) frequency and forms a structure that decays toward the higher harmonics.

4. Hearing redundancy

Psychoacoustic models are built on analysis of the human ear's limited resolution in frequency, time, and other dimensions. Understanding information through hearing is a complex process: it includes receiving the information, recognizing and judging it, understanding the content of the signal, and forming the corresponding awareness and artistic impression. As a result, not all of the data in a sound signal affects the ear's ability to distinguish the loudness, pitch, and direction of a sound, and the part that does not forms auditory redundancy. Auditory redundancy makes it possible to lower the data rate and achieve more efficient digital audio transmission.
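
As a rough check on the data-rate formula in section 1 and the correlation between samples described in section 2, here is a minimal Python sketch. It is not taken from the original article: the function names and the 440 Hz test tone are assumptions chosen for illustration. First-order differencing is only one simple way to exploit the similarity of adjacent samples, and real lossless codecs use more elaborate predictors.

```python
import math


def pcm_bit_rate(sample_rate_hz, bits_per_sample, channels):
    """Uncompressed PCM bit rate in bits per second."""
    return sample_rate_hz * bits_per_sample * channels


def first_difference(samples):
    """Keep the first sample, then store each sample's change from the previous one."""
    if not samples:
        return []
    residual = [samples[0]]
    for previous, current in zip(samples, samples[1:]):
        residual.append(current - previous)
    return residual


def undo_first_difference(residual):
    """Rebuild the original samples by accumulating the stored differences."""
    samples = []
    total = 0
    for value in residual:
        total += value
        samples.append(total)
    return samples


# Ordinary stereo as in section 1: 44.1 kHz, 16 bits per sample, 2 channels.
bit_rate = pcm_bit_rate(44_100, 16, 2)  # bits per second
bytes_per_second = bit_rate // 8

# Hypothetical test signal (an assumption): 100 samples of a 440 Hz tone.
tone = [round(20_000 * math.sin(2 * math.pi * 440 * n / 44_100)) for n in range(100)]
residual = first_difference(tone)

# Compare the largest sample with the largest sample-to-sample difference.
peak_sample = max(abs(s) for s in tone)
peak_residual = max(abs(r) for r in residual[1:])
```

For these parameters `bit_rate` is 1,411,200 bit/s and `bytes_per_second` is 176,400. The differences span a much smaller range than the samples themselves, so they can be coded with fewer bits, and `undo_first_difference` recovers the original samples exactly.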


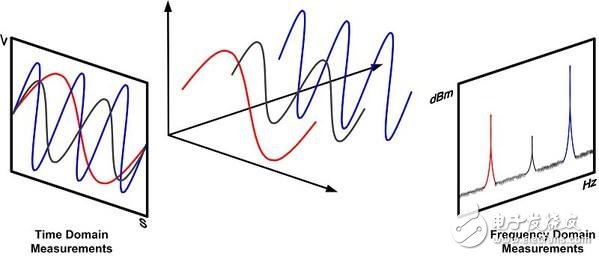

Difference between time and frequency domain


Definition of audio compression technology

May 03, 2023