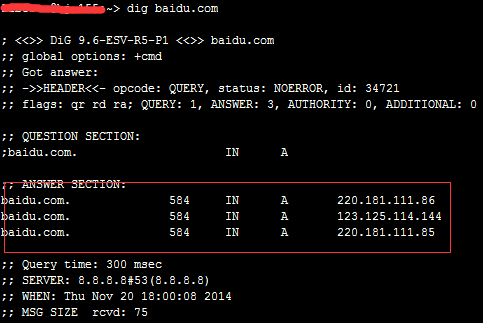

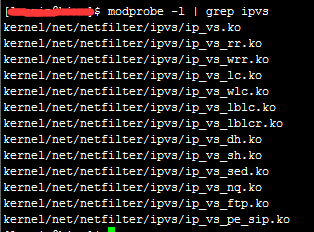

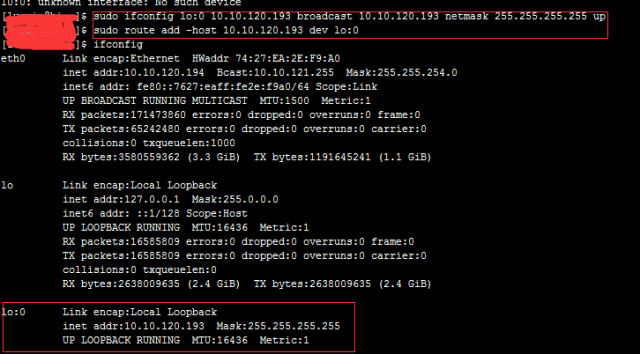

First, understand what "balance" means. It should not be understood narrowly as assigning the same amount of work to every real server, because the load capacity of the servers varies: hardware configuration and network bandwidth may differ, or a server may play several roles at once. What we call "balance" means that no server is overloaded and every server can deliver as much as it is able to.

Load balancing can be built on HTTP redirection. When an HTTP agent (such as a browser) requests a URL from a web server, the web server can return a new URL through the Location field in the HTTP response header. The agent then has to request this new URL, which completes the automatic jump.

This approach has two performance defects:

1. Throughput limit. The throughput of the primary site server is distributed evenly across the servers it redirects to. Assume the RR (Round Robin) scheduling strategy is used and the maximum throughput of each sub-server is 1000 reqs/s. The primary server must then reach 3000 reqs/s to make full use of three sub-servers. With 100 sub-servers, imagine how large the primary server's throughput would have to be. Conversely, if the maximum throughput of the primary server is 6000 reqs/s, each of three sub-servers is handed 2000 reqs/s on average; since a sub-server can handle at most 1000 reqs/s, the number of sub-servers must be increased to 6.
2. Redirected requests differ in depth. Some redirects lead to a static page and others to a much more complex dynamic page, so the actual load on each server is unpredictable, and the primary server knows nothing about it.

Using redirection to balance the load of an entire site is therefore not a good idea. We need to weigh the overhead of redirecting a request against the overhead of processing the actual request: the smaller the former is relative to the latter, the more worthwhile the redirect, as with downloads.
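
The throughput arithmetic in the first defect can be checked with a few lines of Python. This is only a sketch: the function names and the example host names are made up for illustration, and it assumes an ideal round-robin split across identical sub-servers.

```python
import math
from itertools import cycle


def required_primary_throughput(num_subservers, subserver_max_rps):
    """Requests/s the primary must redirect to keep every sub-server busy under RR."""
    return num_subservers * subserver_max_rps


def subservers_needed(primary_max_rps, subserver_max_rps):
    """Smallest number of sub-servers able to absorb everything the primary redirects."""
    return math.ceil(primary_max_rps / subserver_max_rps)


def round_robin_locations(subserver_urls, path, count):
    """Location header values a round-robin primary would return for `count` requests."""
    targets = cycle(subserver_urls)
    return [next(targets) + path for _ in range(count)]


print(required_primary_throughput(3, 1000))  # 3000
print(subservers_needed(6000, 1000))         # 6
# Hypothetical sub-server host names, used only for illustration.
print(round_robin_locations(["http://s1.example.com", "http://s2.example.com"], "/file.zip", 3))
```

The two numeric results match the figures in the text: three sub-servers need a 3000 reqs/s primary, and a 6000 reqs/s primary needs six sub-servers.
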
If you try a number of mirror download sites, you will find that downloads are basically all redirected using Location.

DNS provides the domain name resolution service. When a site is accessed, the IP address that its domain name points to must first be obtained from the DNS server responsible for that domain. In this process the DNS server maps the domain name to an IP address. This mapping can also be one-to-many, in which case the DNS server acts as a load balancing scheduler. It spreads user requests across multiple servers just as the HTTP redirection strategy does, but the implementation mechanism is completely different.

Using the dig command to look at the DNS settings for "baidu" shows that baidu has three A records.

Compared with HTTP redirection, DNS-based load balancing does away with the so-called primary site entirely, or rather the DNS server already plays the role of the primary site. The difference is that, as a scheduler, the performance of the DNS server itself is hardly a concern. DNS records can be cached by the user's browser or by the DNS servers of Internet service providers at every level, so the domain's DNS server is asked to resolve the name again only after the cache expires. In other words, DNS does not have the throughput limit of HTTP redirection, and in theory the number of actual servers can be increased indefinitely.

DNS-based load balancing has the following characteristics:

1. Intelligent resolution based on the user's IP. Among all the available A records, the DNS server can pick the server nearest to the user.
2. Dynamic DNS: the DNS server is updated promptly whenever an IP address changes. Because of caching, a certain delay is of course inevitable.

It also has shortcomings:

1. Users cannot directly see which actual server the DNS resolved to, which makes debugging inconvenient for server operation and maintenance staff.
2. The scheduling policy is limited.
For example, the context of an HTTP request cannot be brought into the scheduling policy. In the HTTP redirection-based load balancing system described earlier, the scheduler works at the HTTP level; it can fully understand the HTTP request and design its scheduling policy around the application logic of the site, such as filtering and redirecting requests according to the URL requested.
3. If the scheduling policy is to be adjusted according to the real-time load of the actual servers, the DNS server has to analyze the health of every server on each resolution. For a DNS server, this kind of custom development has a high threshold, and most sites simply use third-party DNS services.
4. DNS record caching. The caches kept by different programs on the DNS servers at every level will make your head spin.
5. Because of the points above, the DNS server does not balance the workload very well.

Finally, whether to choose DNS-based load balancing depends entirely on your needs.

Everyone has surely come across load balancing based on a reverse proxy, because almost all mainstream web servers are keen to support it. Its core job is to forward HTTP requests. Compared with HTTP redirection and DNS resolution, the reverse proxy scheduler acts as an intermediary between the user and the actual server:

1. Every HTTP request to an actual server must go through the scheduler.
2. The scheduler must wait for the actual server's HTTP response and pass it back to the user. (With the first two methods the scheduler does not relay the response; the actual server sends it directly to the user.)

Load balancing based on a reverse proxy has the following characteristics:

1. The scheduling strategies are rich. For example, different weights can be set for different actual servers, so that more capable servers take on more work.
2. The reverse proxy server needs a high concurrent processing capability, because it works at the HTTP level.
3. The forwarding performed by the reverse proxy server has its own overhead: creating threads, establishing a TCP connection with the back-end server, receiving the result returned by the back-end server, parsing HTTP header information, frequent switching between user space and kernel space, and so on. This time is not long, but when the back-end server handles a request very quickly, the forwarding overhead becomes especially prominent. Requests for static files, for example, are better suited to the DNS-based load balancing described earlier.
4. The reverse proxy server can monitor the back-end servers (system load, response time, availability, number of TCP connections, traffic, and so on) and adjust the load balancing policy according to this data.
5. The reverse proxy server can forward all of a user's requests within a session to one specific back-end server (sticky sessions). The first benefit is that session data can be accessed locally; the second is that the back-end server's dynamic in-memory cache is not wasted.

Because the reverse proxy server works at the HTTP layer, its overhead severely constrains scalability and caps its performance. Can load balancing be achieved below the HTTP level?

The NAT server works at the transport layer. It can modify the IP packets sent to it and change the destination address of a packet to the address of an actual server. Starting with the Linux 2.4 kernel, the built-in Netfilter module maintains a number of packet filtering tables in the kernel, which contain the rules that control packet filtering. Conveniently, Linux provides iptables to insert, modify, and delete the rules in these tables. Even more exciting, the IPVS module is built into the Linux 2.6.x kernel. It works within the Netfilter framework, but focuses on IP load balancing. You may want to check whether your server kernel has the IPVS module installed.
If the check produces output, IPVS is already installed. The management tool for IPVS is ipvsadm, which provides a command-line configuration interface for quickly building a load balancing system. This is the famous LVS (Linux Virtual Server).

1. Enable packet forwarding on the scheduler: `echo 1 > /proc/sys/net/ipv4/ip_forward`
2. Check whether each actual server uses the NAT server as its default gateway. If not, add it: `route add default gw xx.xx.xx.xx`
3. Configure the cluster with ipvsadm. `ipvsadm -A -t 111.11.11.11:80 -s rr` adds a virtual server: `-t` is followed by the external IP and port of the server, and `-s rr` selects the simple round-robin (RR) scheduling policy. RR is a static scheduling policy; LVS also provides a series of dynamic scheduling policies, such as least connection (LC), weighted least connection (WLC), and shortest expected delay (SED). `ipvsadm -a -t 111.11.11.11:80 -r 10.10.120.210:8000 -m` and `ipvsadm -a -t 111.11.11.11:80 -r 10.10.120.211:8000 -m` add two actual servers (they need no external IP): `-r` is followed by the internal IP and port of the actual server, and `-m` means packets are forwarded using NAT.

Run `ipvsadm -L -n` to see the status of the actual servers. That completes the setup.

Experiments show that in a NAT-based load balancing system, the NAT server acting as scheduler can raise throughput to a new level, almost twice that of a reverse proxy server, mostly because forwarding requests in the kernel has lower overhead. However, once the requested content is large, the overall throughput differs little between reverse proxy and NAT. For content with a large processing overhead, a simple reverse proxy is therefore still worth considering for building the load balancing system.

Even such a powerful system has its bottleneck: the network bandwidth of the NAT server, on both the internal and the external network.
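
The bandwidth bottleneck follows from how NAT forwarding works: both the request and the response cross the scheduler. The toy model below is a sketch of that idea, not of the real IPVS code. The `Packet` and `NatScheduler` names and the client address 203.0.113.5 are hypothetical; the virtual and real server addresses are the ones used in the ipvsadm commands above.

```python
from dataclasses import dataclass, replace
from itertools import cycle


@dataclass(frozen=True)
class Packet:
    src: str   # "ip:port" of the sender
    dst: str   # "ip:port" of the receiver
    size: int  # payload size in bytes


class NatScheduler:
    """Toy model of NAT forwarding with round-robin scheduling."""

    def __init__(self, virtual_addr, real_servers):
        self.virtual_addr = virtual_addr
        self._next_server = cycle(real_servers)
        self._connections = {}  # client address -> chosen real server
        self.bytes_forwarded = 0

    def forward_request(self, packet):
        """Rewrite the destination from the virtual address to a real server."""
        server = self._connections.get(packet.src)
        if server is None:
            server = next(self._next_server)
            self._connections[packet.src] = server
        self.bytes_forwarded += packet.size
        return replace(packet, dst=server)

    def forward_response(self, packet):
        """Rewrite the source back to the virtual address on the way out."""
        self.bytes_forwarded += packet.size
        return replace(packet, src=self.virtual_addr)


scheduler = NatScheduler("111.11.11.11:80", ["10.10.120.210:8000", "10.10.120.211:8000"])
request = Packet(src="203.0.113.5:40000", dst="111.11.11.11:80", size=500)
inbound = scheduler.forward_request(request)
response = Packet(src=inbound.dst, dst=request.src, size=50_000)
outbound = scheduler.forward_response(response)
print(inbound.dst, outbound.src, scheduler.bytes_forwarded)
```

In this model the small request and the much larger response are both counted against the scheduler, which is why its network link becomes the limit as traffic grows.
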
Of course, if money is no object, you can buy gigabit or 10-gigabit switches, or even hardware load balancers. But what if you are on a tight budget? A simple and effective way is to combine NAT-based clusters with the DNS method described earlier: for example, run five clusters with 100 Mbps of egress bandwidth each, and use DNS to balance user requests across these clusters. You can also use DNS intelligent resolution to send users to a nearby region. Such a configuration is sufficient for most services, but for large-scale sites that offer services such as downloads or video, the NAT server is still not good enough.

NAT works at the transport layer of the layered network model (layer 4), while direct routing works at the data link layer (layer 2), which looks even more impressive. It forwards a packet to the actual server by modifying the destination MAC address of the packet (without modifying the destination IP). The difference is that the actual server's response packet is sent directly to the client without going through the scheduler.

1. Network settings. Assume one load balancing scheduler and two actual servers. Buy three external IP addresses, one per machine; the default gateway of the three machines must be the same. Finally, set the same IP alias on all of them, here assumed to be 10.10.120.193. The scheduler is then accessed through the IP alias 10.10.120.193, and you can point the domain name of the site to this IP alias.
2. Add the IP alias to the loopback interface lo on each actual server, so that the actual server does not look for other servers with this IP alias. The actual server must also be prevented from responding to ARP broadcasts from the network for the IP alias.
To do this, also run: `echo "1" > /proc/sys/net/ipv4/conf/lo/arp_ignore`, `echo "2" > /proc/sys/net/ipv4/conf/lo/arp_announce`, `echo "1" > /proc/sys/net/ipv4/conf/all/arp_ignore`, and `echo "1" > /proc/sys/net/ipv4/conf/all/arp_announce`.

After this configuration is complete, you can use ipvsadm to configure the LVS-DR cluster: `ipvsadm -A -t 10.10.120.193:80 -s rr`, then `ipvsadm -a -t 10.10.120.193:80 -r 10.10.120.210:8000 -g` and `ipvsadm -a -t 10.10.120.193:80 -r 10.10.120.211:8000 -g`. Here `-g` means packets are forwarded using direct routing.

The biggest advantage of LVS-DR over LVS-NAT is that LVS-DR is not limited by the scheduler's bandwidth. Suppose each of the three servers is limited to 10 Mbps of egress bandwidth at the WAN switch. As long as the LAN switch connecting the scheduler and the two actual servers has no speed limit, LVS-DR can in theory reach a maximum egress bandwidth of 20 Mbps, because the actual servers' response packets go directly to the client without passing through the scheduler. The result has nothing to do with the scheduler's egress bandwidth and depends only on that of the actual servers. With LVS-NAT, the cluster can use at most 10 Mbps. Therefore, the more a service's response packets outweigh its request packets, the more it pays to reduce the scheduler's forwarding overhead, the more the overall scalability improves, and the more the cluster ultimately depends on WAN egress bandwidth.

In general, LVS-DR is suitable for building scalable load balancing systems; web servers, file servers, and video servers all perform very well with it. The prerequisite is that you must purchase a series of legitimate IP addresses for the actual servers.

The request forwarding mechanism based on IP tunneling works as follows: the scheduler encapsulates the IP packet it receives in a new IP packet and forwards it to the actual server, and the actual server's response packet then reaches the user directly.
Most Linux systems currently support this, and it can be implemented with LVS, where it is called LVS-TUN. Unlike LVS-DR, the actual servers do not have to be on the same WAN network segment as the scheduler; the scheduler forwards requests to them through IP tunneling, so the actual servers must also have legitimate IP addresses.

In general, both LVS-DR and LVS-TUN are suitable for web servers whose responses and requests are asymmetric. Which one to choose depends on your network deployment needs. LVS-TUN lets you deploy actual servers in different regions as needed and forward requests according to the principle of nearest access, so if you have that requirement, choose LVS-TUN.
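
The bandwidth example given for LVS-DR and LVS-NAT can be written as a small calculation. It is a simplified sketch under the same assumption as the text (the LAN between scheduler and actual servers is not a limit). The function name is made up for illustration, and treating LVS-TUN like LVS-DR here is an assumption based on the fact that in both modes the response reaches the user directly.

```python
def max_cluster_egress_mbps(mode, scheduler_mbps, real_server_mbps):
    """Theoretical upper bound on response bandwidth for an LVS cluster.

    mode is "nat", "dr" or "tun"; bandwidths are WAN egress limits in Mbps.
    """
    if mode == "nat":
        # Responses pass back through the scheduler, so its link is the cap.
        return scheduler_mbps
    if mode in ("dr", "tun"):
        # Actual servers answer clients directly, so their links add up.
        return sum(real_server_mbps)
    raise ValueError(f"unknown forwarding mode: {mode!r}")


# One scheduler and two actual servers, each limited to 10 Mbps as in the text.
for mode in ("nat", "dr", "tun"):
    print(mode, max_cluster_egress_mbps(mode, 10, [10, 10]))
```

With the figures from the text this gives 10 Mbps for LVS-NAT and 20 Mbps for LVS-DR.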


February 25, 2023