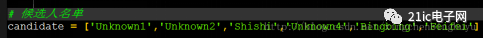

Many people assume that face recognition is very hard to implement. The name alone is intimidating, and a quick search online turns up tutorials that run for pages. I used to be one of those people. In fact, if you only want a working result and do not need to dig into the principles, face recognition is not that difficult. Today we will see how to implement face recognition in under 40 lines of code.

A little distinction

For most people, telling face detection apart from face recognition is not a problem at all. However, many tutorials on the Internet carelessly describe face detection as face recognition, which misleads readers into thinking the two are the same. Face detection answers the question of whether there is a face in a picture; face recognition answers the question of whose face it is. Face detection can be seen as the preliminary step of face recognition. Today we are going to do face recognition.

Tools used

- Anaconda 2 (Python 2)
- Dlib
- scikit-image

Dlib

Dlib is the main tool today, so it deserves a few more words. Dlib is a cross-platform, general-purpose framework written in modern C++. The author is very diligent and keeps it up to date. Dlib covers machine learning, image processing, numerical algorithms, data compression and more, and it is widely used. More importantly, Dlib's documentation is comprehensive and its examples are plentiful. Like many libraries, Dlib also provides a Python interface. Installation is simple and needs only one pip command:

    pip install dlib

The scikit-image package mentioned above is installed the same way:

    pip install scikit-image

Note: if pip install dlib fails, installation takes more effort. The error message is very detailed, so follow its prompts step by step.
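After installing, it can be worth confirming that the packages are importable before going further. The following is only a minimal sketch, not part of the original script: it assumes Python 3 (the article itself uses Python 2), and the helper name `missing_modules` is made up for illustration. Note that scikit-image is installed under that name but imported as `skimage`.

```python
import importlib.util


def missing_modules(module_names):
    """Return the names from module_names that cannot be found for import."""
    return [name for name in module_names
            if importlib.util.find_spec(name) is None]


# These are import names, not pip names: scikit-image is imported as "skimage".
required = ["dlib", "skimage", "numpy"]
missing = missing_modules(required)
if missing:
    print("Missing modules: " + ", ".join(missing))
else:
    print("All required modules are importable.")
```

If anything is reported as missing, rerun the matching pip command and read the error output.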
Face recognition

Dlib is used here because it has already done most of the work for us; we only need to call it. Dlib ships a face detector, a trained face keypoint detector, and a trained face recognition model. Today our goal is to get a working result, not to dig into the principles. Interested readers can go to the official website for the source code and the implementation references. Since today's example fits in under 40 lines of code, it is really not difficult. The difficult parts live in the source code and the papers.

First, look through the file tree to see what is needed today:

The candidate-faces folder holds pictures of the six candidates, and test.jpg is the face image to identify. Our job is to detect the face in test.jpg and then determine who she is among the candidates. girl-face-rec.py is our Python script. shape_predictor_68_face_landmarks.dat is a trained face keypoint detector. dlib_face_recognition_resnet_model_v1.dat is a trained ResNet face recognition model. ResNet is the deep residual network proposed by He Kaiming and colleagues at Microsoft, which won the ImageNet 2015 competition. By having the layers learn residuals, the network can be made much deeper and more accurate than earlier plain CNNs.

1. Preparation

shape_predictor_68_face_landmarks.dat and dlib_face_recognition_resnet_model_v1.dat can be downloaded from the Dlib site. If the hyperlink is not clickable, enter the following URL directly: http://dlib.net/files/ .

Then prepare face images of several people as the candidate faces, preferably frontal faces, and put them in the candidate-faces folder. Six pictures are prepared here.

Then prepare four images of faces to be identified. One is actually enough; four are used here just to look at different situations. The first two differ from the pictures in the candidate folder.
The third is the original picture used as a candidate, and the fourth shows the face turned slightly to the side, with a shadow on the right.

2. Identification process

The data is ready, so the next step is the code. The general identification process is:

- For each candidate, run face detection, keypoint extraction, and descriptor generation, then save the candidate descriptors.
- Run face detection, keypoint extraction, and descriptor generation on the test face.
- Compute the Euclidean distance between the test face descriptor and each candidate face descriptor. The candidate with the smallest distance is judged to be the same person.

3. Code

The code needs little explanation because the comments cover it. Here is girl-face-rec.py:

    # -*- coding: UTF-8 -*-
    from __future__ import print_function  # lets the script run on Python 2 and 3

    import sys, os, dlib, glob, numpy
    from skimage import io

    if len(sys.argv) != 5:
        print("Please check if the parameters are correct")
        sys.exit()

    # 1. Face keypoint detector
    predictor_path = sys.argv[1]
    # 2. Face recognition model
    face_rec_model_path = sys.argv[2]
    # 3. Candidate face folder
    faces_folder_path = sys.argv[3]
    # 4. Face to be identified
    img_path = sys.argv[4]

    # 1. Load the frontal face detector
    detector = dlib.get_frontal_face_detector()
    # 2. Load the face keypoint detector
    sp = dlib.shape_predictor(predictor_path)
    # 3. Load the face recognition model
    facerec = dlib.face_recognition_model_v1(face_rec_model_path)

    # win = dlib.image_window()

    # Candidate face descriptor list
    descriptors = []

    # For each face under the folder:
    # 1. Face detection
    # 2. Keypoint detection
    # 3. Descriptor extraction
    # sorted() keeps the file order deterministic so it lines up with the
    # candidate list further down.
    for f in sorted(glob.glob(os.path.join(faces_folder_path, "*.jpg"))):
        print("Processing file: {}".format(f))
        img = io.imread(f)
        # win.clear_overlay()
        # win.set_image(img)

        # 1. Face detection
        dets = detector(img, 1)
        print("Number of faces detected: {}".format(len(dets)))

        for k, d in enumerate(dets):
            # 2. Keypoint detection
            shape = sp(img, d)
            # Draw the face area and keypoints
            # win.clear_overlay()
            # win.add_overlay(d)
            # win.add_overlay(shape)

            # 3. Descriptor extraction, 128D vector
            face_descriptor = facerec.compute_face_descriptor(img, shape)

            # Convert to a numpy array
            v = numpy.array(face_descriptor)
            descriptors.append(v)

    # Do the same for the face to be recognized
    # Extract the descriptor (same steps, not commented again)
    img = io.imread(img_path)
    dets = detector(img, 1)

    dist = []
    for k, d in enumerate(dets):
        shape = sp(img, d)
        face_descriptor = facerec.compute_face_descriptor(img, shape)
        d_test = numpy.array(face_descriptor)

        # Calculate the Euclidean distance to every candidate descriptor
        for i in descriptors:
            dist_ = numpy.linalg.norm(i - d_test)
            dist.append(dist_)

    # Candidate list, in the same order as the candidate image files
    candidate = ['Unknown1', 'Unknown2', 'Shishi', 'Unknown4', 'Bingbing', 'Feifei']

    # Candidates and distances form a dict
    c_d = dict(zip(candidate, dist))

    # Sort by distance; the first entry is the closest candidate
    cd_sorted = sorted(c_d.items(), key=lambda d: d[1])
    print("\n The person is: ", cd_sorted[0][0])

    dlib.hit_enter_to_continue()

4. Results of the operation

Open a command line in the folder where the .py file is located and run the following command:

    python girl-face-rec.py 1.dat 2.dat ./candidate-faces test1.jpg

Since the names shape_predictor_68_face_landmarks.dat and dlib_face_recognition_resnet_model_v1.dat are too long, I renamed them to 1.dat and 2.dat.

The result is as follows:

    The person is Bingbing.

If you have forgotten, scroll up to see who is in test1.jpg. If you are interested, try running all four test pictures.

Note that the output for the first three pictures is very good, but the output for the fourth test picture is candidate 4. Comparing the two images makes the cause of the confusion easy to see. After all, the machine is not a human being, and it takes people to make the machine smarter.

Interested readers can continue to study how to improve the recognition accuracy.
For example, use several candidate pictures per person, then compare the average of the test face's distances to each person's pictures. The rest is up to you.
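The averaging idea can be sketched roughly as follows. This is an illustration under stated assumptions rather than the author's implementation: the function names and the `gallery` layout are hypothetical, plain Python lists stand in for numpy arrays, and toy 3-D vectors with made-up values stand in for Dlib's 128-D descriptors.

```python
import math


def euclidean(a, b):
    """Euclidean distance between two equal-length vectors."""
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))


def mean_distance(test_descriptor, descriptors):
    """Average distance from the test face to one person's descriptors."""
    total = sum(euclidean(test_descriptor, d) for d in descriptors)
    return total / len(descriptors)


def identify(test_descriptor, gallery):
    """Return (name, mean distance) of the closest candidate.

    gallery maps a candidate name to a list of that person's descriptors.
    """
    distances = {name: mean_distance(test_descriptor, vectors)
                 for name, vectors in gallery.items()}
    best = min(distances, key=distances.get)
    return best, distances[best]


# Hypothetical gallery: two descriptors per person, made-up values.
gallery = {
    "Bingbing": [[0.0, 0.0, 0.0], [0.2, 0.0, 0.0]],
    "Feifei": [[1.0, 1.0, 1.0], [1.0, 1.0, 0.8]],
}
name, distance = identify([0.1, 0.1, 0.0], gallery)
print("The person is:", name)
```

In a real run, each list in `gallery` would hold the descriptors that `facerec.compute_face_descriptor` returns for several pictures of the same person. Averaging over several pictures should make a single unlucky candidate photo, such as one with a shadow, less likely to decide the result.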

December 21, 2022